Get into enable mode and do the following.

#CISCO ASAV SUBINTERFACE NATIVE VLAN HOW TO#

First let’s look at how to do it on an HP Procurve switch. The name of the file (ifcfg-eth0.10) MUST match the DEVICE= parameter.ĭon’t forget to restart networking on the server to make sure the changes take effect: 10 on the end of the DEVICE=eth0 specifies which VLAN tag this interface will process traffic for. “VLAN=yes” tells linux to load the 802.1q kernel module, and the. Notice the inclusion of a new parameter, “VLAN=yes”, as well as the new syntax for the device name. This new file is called “ifcfg-eth0.10” and looks like this: Next we’ll add the subinterface (or VLAN interface) by creating a new config file that will live in the same directory as ifcfg-eth0 (/etc/sysconfig/network-scripts). The base interface (eth0) is configured as it normally is. Notice that there is no special configuration yet. Here’s what mine looks like (without the x’s of course):

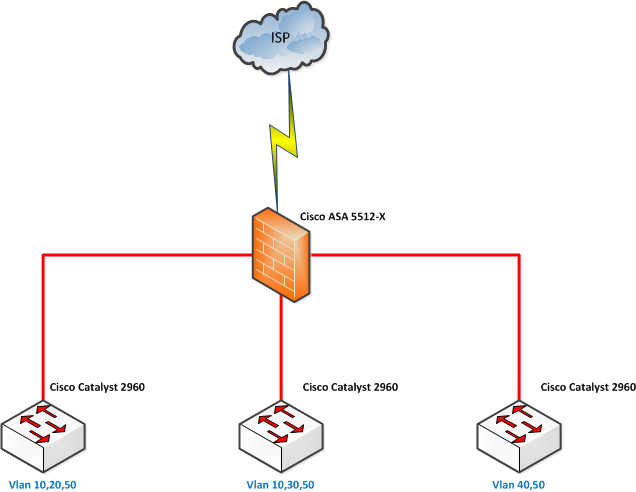

It should be in /etc/sysconfig/network-scripts/ifcfg-eth0. Since we use RHEL on all of our clusters, that’s what I’ll show.įirst, edit the config file for interface eth0. We’ll start with the server configuration. In this post I’ll demonstrate how it’s done. This enables us to use two VLANs on the same port and still specify the traffic for one of them as “untagged”. In our case, we run one VLAN to the server as a regular access-mode VLAN, and we also run a second VLAN to the server as a tagged VLAN. We do it with both Cisco and HP Procurve switches. To solve this problem, we use linux subinterfaces (or vlan interfaces) on the server and 802.1q VLAN tagging on the switches. In addition, the interactive nodes are NFSRoot booted, so they must operate in a plain-vanilla network environment. These interactive nodes need to have access to both networks, but we don’t like to burn two switch ports for each interactive node. Each cluster has an interactive node (or head node) that users have access to to submit jobs, do quick dev work, etc.

Normally, users never touch the compute nodes themselves, but rather, interact with them through the batch system. In our HPC environment, we use a private backend IP network to control cluster compute nodes, and a separate, public frontend IP network to give users access to the cluster.
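For reference, the server-side subinterface file described in this post can be sketched roughly as follows. Only the file name, `DEVICE=eth0.10` and `VLAN=yes` come from the post itself; the `BOOTPROTO`, `ONBOOT`, `IPADDR` and `NETMASK` values are placeholder assumptions, and the `CONF_DIR` variable is a hypothetical helper that writes to a scratch directory so a live system is not touched.

```shell
#!/bin/sh
# Sketch: write a VLAN 10 subinterface config for eth0.
# CONF_DIR is a hypothetical scratch location; on a RHEL server the real
# directory is /etc/sysconfig/network-scripts.
CONF_DIR="${CONF_DIR:-./network-scripts-demo}"
mkdir -p "$CONF_DIR"

# The file name must match the DEVICE= value (ifcfg-eth0.10 <-> eth0.10).
# IPADDR and NETMASK below are placeholders, not values from the post.
cat > "$CONF_DIR/ifcfg-eth0.10" <<'EOF'
DEVICE=eth0.10
VLAN=yes
BOOTPROTO=none
ONBOOT=yes
IPADDR=192.0.2.10
NETMASK=255.255.255.0
EOF

# Show the result for review before copying it into place.
cat "$CONF_DIR/ifcfg-eth0.10"

# After installing the file in the real directory, networking has to be
# restarted as root. On older RHEL releases that is typically:
#   service network restart
```

Keeping the generated file in a scratch directory first makes it easy to check that the file name and the `DEVICE=` line agree before anything on the server changes.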
